I wanted a way to quickly spin different VMs up and down on my KVM dev box, to help with testing things like OpenStack, Swift, Ceph and Kubernetes. Some of my requirements were as follows:

- Define everything in a markup language, like YAML

- Manage VMs (define, stop, start, destroy and undefine) and apply settings as a group or individually

- Support different settings for each VM, like disks, memory, CPU, etc.

- Support multiple drives and types, including Virtio, SCSI, SATA and NVMe

- Create users and set root passwords

- Manage networks (create, delete) and which VMs go on them

- Mix and match Linux distros and releases

- Use existing cloud images from distros

- Manage access to the VMs including DNS/hosts resolution and SSH keys

- Have a good set of defaults so it would work out of the box

- Potentially support other architectures (like ppc64le or arm)

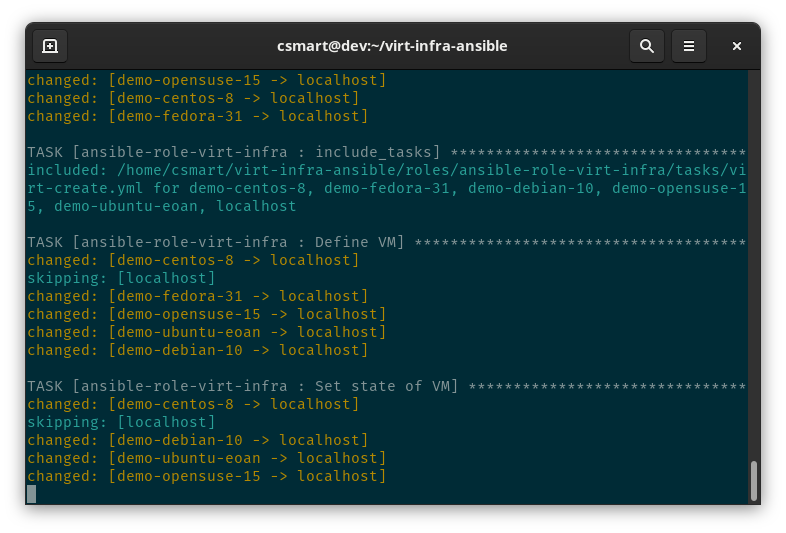

So I hacked together an Ansible role and an example playbook. Guests can be set to running, shutdown, destroyed or undefined (to delete and clean up). The role also manages multiple libvirt networks, and guests can have different specs as well as multiple disks of different types (SCSI, SATA, Virtio, NVMe). With Ansible’s `--limit` option, any individual guest, a hostgroup of guests, or even a mix can be managed.
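As a rough illustration of per-guest state and mixed disk buses, here is a sketch of a YAML inventory. The `virt_infra_*` variable names are assumptions modeled on the role’s README, and the group `example` and its hosts are hypothetical; check the README for the exact names, units and defaults before using it.

```yaml
---
# Sketch only: virt_infra_* names are assumptions based on the role's README.
example:
  vars:
    # Group-wide defaults applied to every guest in this hostgroup
    virt_infra_state: running
    virt_infra_ram: 2048
    virt_infra_cpus: 2
  hosts:
    example-0:
      # Host vars override group vars, so only this guest is shut down
      virt_infra_state: shutdown
    example-1:
      # Multiple disks on different buses (sizes assumed to be in GB);
      # the first disk is assumed to hold the OS image
      virt_infra_disks:
        - name: boot
          size: 20
          bus: scsi
        - name: data-sata
          size: 10
          bus: sata
        - name: data-nvme
          size: 10
          bus: nvme
```

With an inventory like this, `ansible-playbook --limit kvmhost,example-1 ./virt-infra.yml` would act on just one guest, while `--limit kvmhost,example` would act on the whole hostgroup.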

Although Terraform with libvirt support is potentially a good solution, using Ansible means I can use the same inventory to further manage the guests, and I’ve also been able to configure the KVM host itself. All that’s really needed is a Linux host capable of running KVM, some guest images and a basic inventory. Ansible will do the rest (on supported distros).
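The "basic inventory" also needs an entry for the KVM host itself, since the playbook is run with `--limit kvmhost,...`. A minimal sketch, assuming the KVM host is the same machine you run Ansible on (the `localhost` entry and interpreter path are assumptions; point them at your real host if it is remote):

```yaml
---
# Hypothetical kvmhost inventory: the machine running Ansible is the KVM host.
kvmhost:
  hosts:
    localhost:
      # Run tasks directly rather than over SSH
      ansible_connection: local
      ansible_python_interpreter: /usr/bin/python3
```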

The README is quite detailed, so I won’t repeat all of that here. The sample playbook comes with some example inventories, such as this simple one for spinning up three CentOS hosts (and using defaults).

```yaml
simple:
  hosts:
    # [0:2] is an inclusive range: centos-simple-0, -1 and -2
    centos-simple-[0:2]:
      ansible_python_interpreter: /usr/bin/python
```

This can be executed like so.

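```shell
# Download the CentOS 7 cloud image into libvirt's default image directory
curl -O https://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud.qcow2
sudo mv -iv CentOS-7-x86_64-GenericCloud.qcow2 /var/lib/libvirt/images/

# Get the example playbook (--recursive also fetches the repo's git submodules)
git clone --recursive https://github.com/csmart/virt-infra-ansible.git
cd virt-infra-ansible

# Configure the KVM host and create the guests in the "simple" group
ansible-playbook --limit kvmhost,simple ./virt-infra.yml
```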

There is also a more detailed example inventory that uses multiple distros and custom settings for the guests.
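To give a flavour of mixing distros, here is a sketch of such an inventory. It is not the repo’s own example: the group and host names are hypothetical, the `virt_infra_*` variable names are assumptions based on the role’s README, and the Fedora image filename is a placeholder for whichever cloud image you have placed in `/var/lib/libvirt/images/`.

```yaml
---
# Sketch of a mixed-distro inventory; names and filenames are assumptions.
mixed:
  hosts:
    centos-0:
      virt_infra_distro: centos
      virt_infra_distro_image: CentOS-7-x86_64-GenericCloud.qcow2
      # CentOS 7 cloud images ship Python 2 as /usr/bin/python
      ansible_python_interpreter: /usr/bin/python
    fedora-0:
      virt_infra_distro: fedora
      virt_infra_distro_image: fedora-cloud-image.qcow2  # hypothetical filename
      # Custom specs for just this guest
      virt_infra_ram: 4096
      virt_infra_cpus: 4
      ansible_python_interpreter: /usr/bin/python3
```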

So far this has been very handy!

9 thoughts on “Using Ansible to define and manage KVM guests and networks with YAML inventories”

Have you tried kcli? https://github.com/karmab/kcli/

I think the only missing part would be the “ppc64le or arm” part, but that might just be a matter of enabling Copr builds for those architectures.

Didn’t know about it, but it looks interesting! Thanks.

Do you have the link to the Ansible Galaxy page?

Hi Vinay, yes it’s here https://galaxy.ansible.com/csmart/virt_infra

Thanks

How would you handle the relationship between a physical host and its VMs? I inherited a host_vars file that lists the VMs as a host variable.

What I’m trying to do is: for the VMs that belong to `inventory_hostname`, run a playbook that generates a netinstall (kickstarted) bootable ISO for each one.

e.g.

```
hosts: kvm_host
for vm in kvm_host.vms: run role "generate_bootable_iso"
```

The role is designed to create an ISO for `inventory_hostname`, but that won’t work for me, since `inventory_hostname` is the physical host of the VMs.

Can you post an example of the inventory and any playbooks you’re running?
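A minimal sketch of one common pattern for this, assuming `vms` is a host variable on the KVM host holding a list of VM names: loop `include_role` over that list and hand each entry to the role as a loop variable. The loop variable name `vm` is hypothetical, and the `generate_bootable_iso` role would need to be changed to build its ISO for `vm` instead of `inventory_hostname`.

```yaml
---
# Hypothetical play: runs on the physical KVM host and applies the role
# once per VM listed in that host's `vms` variable.
- hosts: kvm_host
  tasks:
    - name: Build a bootable ISO for each VM on this host
      ansible.builtin.include_role:
        name: generate_bootable_iso
      loop: "{{ vms }}"
      loop_control:
        # Name the loop variable so it doesn't clash with `item`
        # if the role uses loops internally
        loop_var: vm
```

If `vms` is a list of dictionaries rather than plain names, the role would reference something like `vm.name` instead.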

Is there a way to control how big the /boot partition is once the VM is created? I keep getting a 1 GB /boot when deploying Fedora 36, and cannot figure out how to make it bigger, around 4 GB, so that more than two kernels can be stored. Any advice is appreciated; this role is really useful for some of the work that I do.

Hi Corey, sorry I missed this comment. There is no way to do what you want with my role, as the 1 GB /boot partition is created and set that way in the disk image by Fedora. You would have to use a tool like `gparted` to move your partitions around and use a custom image, or create your own cloud image from scratch and use that instead.